EARL 2016 Review

This year I was able to attend EARL, the main R conference in London.

It is a two-day event where it is possible to attend up to 22 speeches. The structure is similar on both days: speeches by sponsors kick-start the morning, followed by three blocks of three speakers.

For another review of this event, you can look at Willie’s note.

All in all, it was a great event, with two speeches demonstrating the use of R in a real-time analysis process, a number of new features announced by the RStudio team and, as expected, a couple of very technical presentations on operational research.

Day 1, Session 1: Sponsor Speeches

Joe Cheng went first and presented the news from RStudio. The least we can say is that RStudio has been pretty busy this year, with the release of the flexdashboard, profvis and sparklyr packages, the new notebook feature in RStudio and the inclusion of other key languages in classic R Markdown files (more to come from the RStudio team). sparklyr is a package that connects R to Spark and lets you use dplyr functions on Spark. It is a handy way for an R user to learn Spark.

The second talk was by David Davis from Microsoft, to whom the prize for best slide goes:

Why is #rstats special? @revodavid presenting! haha copying & pasting @StackOverflow we have all done it #EARL2016 pic.twitter.com/2Fq5lcpzBU

— Alice Data (@alice_data) 14 September 2016

David’s speech was about the maturity of the R ecosystem, and it debunked R’s reputation for unreliability.

The next two speakers, members of the R Consortium, put that reputation to rest by presenting R-hub, a platform for validating R packages.

Day 1, Session 2

The first speaker of the morning was Magda, who presented how she helped tune the algorithm behind the customer journey on the Telegraph website.

She presented the multiple algorithms tested to recommend articles on the Telegraph and the progressive journey to “productionise” a collaborative filtering algorithm.
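To give an idea of what collaborative filtering means for article recommendation, here is a minimal sketch in Python. It is not the Telegraph's algorithm: the reading history, the article ids and the choice of item-based cosine similarity are all assumptions made for illustration.

```python
from math import sqrt

# Hypothetical reading history: reader -> set of article ids they opened.
READS = {
    "reader_a": {"politics_1", "politics_2", "sport_1"},
    "reader_b": {"politics_1", "politics_2"},
    "reader_c": {"sport_1", "sport_2"},
    "reader_d": {"politics_2", "sport_2"},
}


def item_similarity(reads):
    """Cosine similarity between articles, based on the readers they share."""
    readers_of = {}
    for reader, articles in reads.items():
        for article in articles:
            readers_of.setdefault(article, set()).add(reader)
    sims = {}
    for a, readers_a in readers_of.items():
        for b, readers_b in readers_of.items():
            if a != b:
                shared = len(readers_a & readers_b)
                sims[(a, b)] = shared / sqrt(len(readers_a) * len(readers_b))
    return sims


def recommend(reader, reads, top_n=2):
    """Rank unseen articles by their summed similarity to what the reader has read."""
    sims = item_similarity(reads)
    seen = reads[reader]
    scores = {}
    for (a, b), sim in sims.items():
        if a in seen and b not in seen:
            scores[b] = scores.get(b, 0.0) + sim
    # Highest score first; ties are broken alphabetically for reproducibility.
    return sorted(scores, key=lambda article: (-scores[article], article))[:top_n]


print(recommend("reader_b", READS))
```

The idea is that two articles are considered close when the same readers opened both, so no knowledge of the article content is needed.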

Tharsis came second and spoke on the emergence of a new kind of data. Tharsis uses a number of websites, such as Google Trends and Twitter, to collect data and create features. After his speech, you can understand why not everybody uses this kind of data: the way Tharsis does it implies a very complex architecture, and the results may be meager. But it is a subject with potential, as he managed to improve existing financial models with his features.

The third speech was by Amanda Lee on social marketing on Instagram. Unlike Twitter or Facebook, Instagram does not provide a ready-to-use platform to analyse a post. So Amanda built a platform based on Shiny to do that, taking advantage of the capabilities of R to create the metrics she needs. Among the metrics she assesses are the level of influence of a user and the quality of a post, which turned out to be complex notions to pin down.

Day 1, Session 3

The main speech was by Sarah Political on her journey migrating from SAS to R.

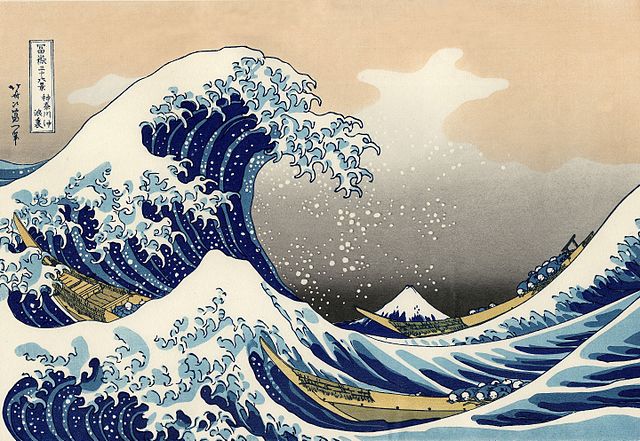

Nothing like a picture to describe the journey:

Of note here: the virtual machines they installed did not work out for their use of R, and they had to move to physical ones.

One crucial question was discussed during the session: how do you integrate R into a department that was seemingly doing well without it?

The three speakers agreed that it needs to be done slowly: first by showing what can be done, then by converting the key colleagues, the “ones who talk”, and finally through continuous education until the entire team is comfortable with R.

Day 1, Session 4

The first speaker was Thomas Baynes, who works for the Ministry of Defense. His speech was about operational research applied to defense and security. His job is to answer questions like ‘How many ships, tanks, aircraft do we need?’, and R is used to do it.

The workflow used for that is the following:

Defense Policy -> Scenarios -> War gaming/Simulation -> Concurrency Modelling -> Procurement Decisions

In this workflow, R is mainly used for data management and for creating data visualizations.

The challenge Thomas faced was dealing with R versioning in a non-admin environment with restricted internet access. They ended up using virtual machines isolated from the main network, which turned out to be a life changer.

The third speaker was Nick Masca, from BGL Group. Nick works with insurance data and has had to face several challenges: detecting fraud, predicting insurance prices, forecasting complaint types, etc.

His speech was a valuable reminder of best practice in modeling, and of how it goes against the common belief that more data always produces more accurate models. Through benchmarks, he reminded us of a couple of biases that can occur: obsolete data, unbalanced labeled data, etc. He also pointed out that good models can be built with only a small set of data.
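The unbalanced-label bias is easy to illustrate with a few lines of Python. This is only a sketch with made-up numbers (10 fraudulent claims out of 1,000), not one of Nick's benchmarks: a "model" that never predicts fraud looks excellent on accuracy while catching no fraud at all.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the true label."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def recall(y_true, y_pred):
    """Share of the true positives (label 1) that the model actually found."""
    positives = sum(1 for t in y_true if t == 1)
    found = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    return found / positives


# Hypothetical data: 1 = fraud, 0 = no fraud; only 1% of the claims are fraudulent.
labels = [1] * 10 + [0] * 990
# A useless model that always answers "no fraud".
always_negative = [0] * len(labels)

print("accuracy:", accuracy(labels, always_negative))
print("recall:", recall(labels, always_negative))
```

On such data, accuracy alone says very little, which is why the balance of the labels needs to be checked before trusting a benchmark.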

Day 2, Session 1: Sponsor Speeches

The day was kick-started by Garrett Grolemund from RStudio. One thing that can be said about Garrett is that he knows how to teach: his speech was crystal clear. It was about the evolution of R over the last year and the strengths and weaknesses of R compared to other languages. If I had to summarize the speech in a satirical way, I would say that the title could have been “Why R is unconditionally better than Python” (joke).

Day 2, Session 2

The first speaker was Hovhannes Khandanyan from Carlson Wagonlit Travel. The speech was on the optimization of travel and expense budgets. At first sight, some could fear the subject would be boring. Nonetheless, Hovhannes deployed such smart strategies to handle the issue that I rank his speech among the best of the day. To deal with the diversity of data sources, Hovhannes had to use approximate matching, a similarity score and locality-sensitive matching. Once the database was built, Hovhannes was able to set in motion a large number of projects: negotiations with suppliers, incentives for visitors to find local restaurants and prediction of the total cost of trips.
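To make the idea of approximate matching with a similarity score more concrete, here is a minimal sketch in Python. It is not Hovhannes's implementation: the supplier names, the normalisation rules and the 0.8 threshold are assumptions, and the score comes from `difflib.SequenceMatcher` in the standard library.

```python
from difflib import SequenceMatcher


def normalise(name):
    """Lower-case the name, drop dots and commas, and collapse whitespace."""
    cleaned = name.lower().replace(".", " ").replace(",", " ")
    return " ".join(cleaned.split())


def similarity(a, b):
    """Similarity score between 0 and 1 for two supplier names."""
    return SequenceMatcher(None, normalise(a), normalise(b)).ratio()


def best_match(name, candidates, threshold=0.8):
    """Return the closest reference name, or None if nothing is close enough."""
    score, candidate = max((similarity(name, c), c) for c in candidates)
    return candidate if score >= threshold else None


# Hypothetical reference list of suppliers.
REFERENCE = ["Grand Hotel Paris", "Airport Taxi Ltd"]

print(best_match("Grnd Hotel Paris", REFERENCE))
print(best_match("Completely Different Co", REFERENCE))
```

Comparing every name against every reference entry does not scale to large databases, which is presumably where a locality-sensitive technique comes in: it limits the comparisons to candidates that are likely to be similar.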

The second speaker was Louis Vine from Funding Circle, on how he and his team implemented live credit scoring in R. Internally, models were handled by data scientists proficient only in R, and the results were used by software engineers who traditionally don’t know R. To solve that issue, they created a Ruby wrapper for R scripts which acts as a REST API. This allows them to run R scripts in a “productionised” environment. Louis put the emphasis of his speech on good practice when “productionising” R scripts: unit tests need to be run for each score, and a separate validation script needs to be run.

Day 2, Session 3

The second speaker was Thomas Kounitis from eBay, presenting a Shiny application used to optimize the marketing budget across multiple channels. Each marketing channel has a different model of traffic as a function of investment. The Shiny app uses econometric models to optimize the split of the budget across marketing channels under a budget constraint.

The third speaker was Eike Brenchmann, on real-time analytics with R. Like Louis, Eike and his team use R within their process, in their case to assess claims. Whereas Louis and his team created an API, Eike and his team have R scripts at the core of their process and use Java to call the R scripts and get the score back in JSON format.
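The principle of splitting a budget across channels with different response curves can be sketched in Python as follows. This is an illustration under strong assumptions, not the eBay app: the channel names, the square-root response curves (diminishing returns) and the greedy allocation in fixed steps are all hypothetical stand-ins for the econometric models mentioned in the talk.

```python
from math import sqrt

# Hypothetical response curves: traffic = scale * sqrt(spend).
CHANNELS = {"search": 30.0, "display": 20.0, "email": 10.0}


def traffic(scale, spend):
    """Traffic generated by one channel for a given spend (assumed curve)."""
    return scale * sqrt(spend)


def allocate(channels, budget, step=100):
    """Greedy split: give each budget step to the channel with the best marginal gain."""
    spend = {name: 0 for name in channels}
    for _ in range(int(budget // step)):
        best = max(
            channels,
            key=lambda name: traffic(channels[name], spend[name] + step)
            - traffic(channels[name], spend[name]),
        )
        spend[best] += step
    return spend


plan = allocate(CHANNELS, budget=14000)
print(plan)
print("total traffic:", sum(traffic(CHANNELS[n], s) for n, s in plan.items()))
```

Because each assumed curve flattens as spend grows, the greedy approach stops feeding a channel once its next step brings less traffic than a step elsewhere. With real econometric models the curves would be fitted on data, and a proper constrained optimizer would probably replace the greedy loop.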
